What is the Modern Data Stack?

The modern data stack is the collection of tools and technologies that organisations use to collect, store, process and analyse data, typically delivered as cloud-based services.

The modern data stack is characterised by:

- A shift from traditional, monolithic data management systems to more flexible, scalable and efficient cloud-based solutions.

- The ability to handle the volume, variety, and velocity of big data, facilitating more advanced analytics, machine learning, and data-driven decision-making.

- A modular, often cloud-native architecture that allows companies to select the best tools for their specific needs and integrate them into a cohesive ecosystem.

- Flexibility and scalability to support a wide range of data operations, from simple analytics to complex machine learning projects, driving insights and innovation.
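As a minimal illustration of modularity, the Python sketch below assumes two small interfaces: a source that yields records and a destination that loads them. Either side can be swapped without touching the pipeline logic. The names `Source`, `Destination`, `StaticSource`, `InMemoryDestination`, `SQLiteDestination` and `sync` are hypothetical, and the standard-library SQLite module only stands in for a real cloud warehouse.

```python
import sqlite3
from dataclasses import dataclass, field
from typing import Iterable, Protocol

Record = dict[str, object]


class Source(Protocol):
    """Any component that can produce records (hypothetical interface)."""
    def extract(self) -> Iterable[Record]: ...


class Destination(Protocol):
    """Any component that can store records (hypothetical interface)."""
    def load(self, table: str, rows: list[Record]) -> int: ...


@dataclass
class StaticSource:
    """Stand-in for an API or database: yields hard-coded records."""
    rows: list[Record]

    def extract(self) -> Iterable[Record]:
        return iter(self.rows)


@dataclass
class InMemoryDestination:
    """Keeps loaded rows in a dict of lists, keyed by table name."""
    tables: dict[str, list[Record]] = field(default_factory=dict)

    def load(self, table: str, rows: list[Record]) -> int:
        self.tables.setdefault(table, []).extend(rows)
        return len(rows)


class SQLiteDestination:
    """Loads rows into SQLite; table and column names are assumed to be trusted identifiers."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn

    def load(self, table: str, rows: list[Record]) -> int:
        if not rows:
            return 0
        cols = list(rows[0])
        col_sql = ", ".join(cols)
        placeholders = ", ".join("?" for _ in cols)
        self.conn.execute(f"CREATE TABLE IF NOT EXISTS {table} ({col_sql})")
        self.conn.executemany(
            f"INSERT INTO {table} ({col_sql}) VALUES ({placeholders})",
            [tuple(r[c] for c in cols) for r in rows],
        )
        self.conn.commit()
        return len(rows)


def sync(source: Source, destination: Destination, table: str) -> int:
    """The pipeline depends only on the two interfaces, not on concrete tools."""
    return destination.load(table, list(source.extract()))


orders = StaticSource([{"id": 1, "amount": 9.5}, {"id": 2, "amount": 3.0}])
print(sync(orders, InMemoryDestination(), "orders"))  # 2
warehouse = sqlite3.connect(":memory:")
print(sync(orders, SQLiteDestination(warehouse), "orders"))  # 2
```

Because `sync` only relies on the two interfaces, moving from the in-memory destination to the SQLite one needs no change to the pipeline itself; in a real stack, managed connectors and warehouses would plug into the same kind of seam.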

What’s the Biggest Difference Between the Modern Data Stack and the Traditional Data Stack?

The key distinction between a modern data stack and a legacy data stack is that the modern data stack is generally hosted in the cloud and requires minimal technical configuration by the user.

These features enhance end-user accessibility and scalability, enabling rapid adaptation to growing data needs without the costly and prolonged downtime typical of scaling local server instances.

Designed with analysts and business users in mind, the components of the modern data stack let users of all backgrounds both use and manage these tools without extensive technical knowledge.

In essence, the modern data stack reduces the technical barrier to entry for data integration.

Key Benefits of a Modern Data Stack

- Cloud-Based Hosting: The modern data stack is generally hosted in the cloud, eliminating the need for extensive on-premise infrastructure and reducing the associated maintenance costs.

- Minimal Technical Configuration: Minimal technical setup makes it accessible to users without deep technical expertise and allows quick deployment and use.

- Enhanced Scalability: Easily scalable to meet growing data demands without the lengthy downtime and high costs of scaling local server instances.

- Flexibility: Flexible enough to support a wide range of data operations, from simple analytics to complex machine learning projects.

- Modularity: Modular components enable companies to select and integrate the best tools for their specific needs into a cohesive ecosystem.

- Advanced Data Handling: The modern data stack is capable of managing the volume, variety and velocity of big data, facilitating advanced analytics and machine learning.

- End-User Accessibility: Designed with analysts and business users in mind, the modern data stack promotes ease of use and management without requiring extensive technical knowledge.

- Reduced Technical Barrier: The lowered technical barrier to entry for data integration makes it easier for users of all backgrounds to use and administer these tools.

- Support for Data-Driven Decision Making: Enables insights and innovation by facilitating more advanced analytics and data-driven decision-making processes.

The Data Stack

We’ve looked at the differences between modern and traditional data stacks.

But let’s take a step back and examine what a data stack actually comprises.

What exactly is a data stack?

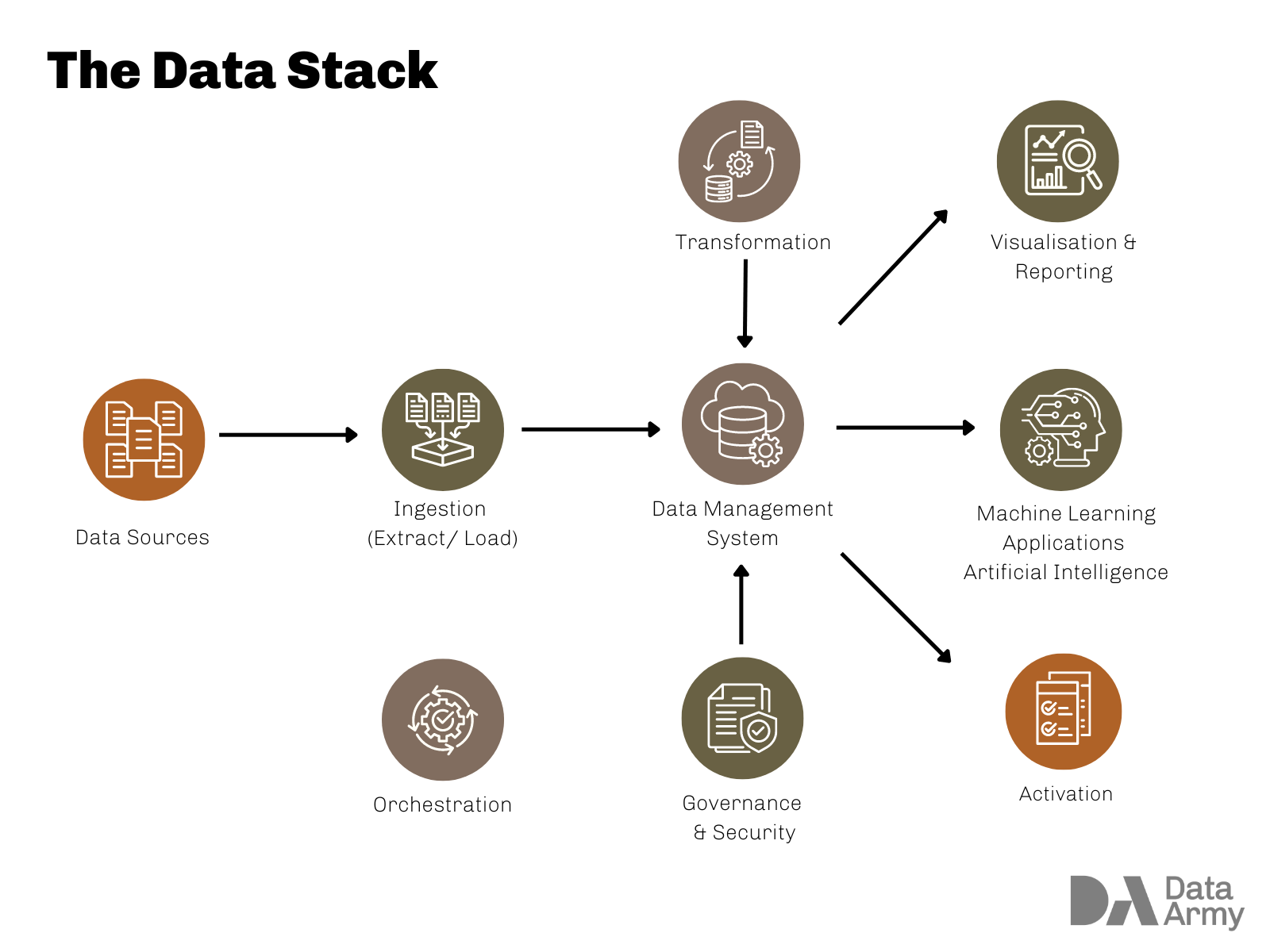

A data stack is the combination of tools, technologies and processes that are used to collect, store, process and analyse data.

It typically includes various layers that handle different aspects of data management and analytics, ensuring a smooth flow of data from raw collection to actionable insights.

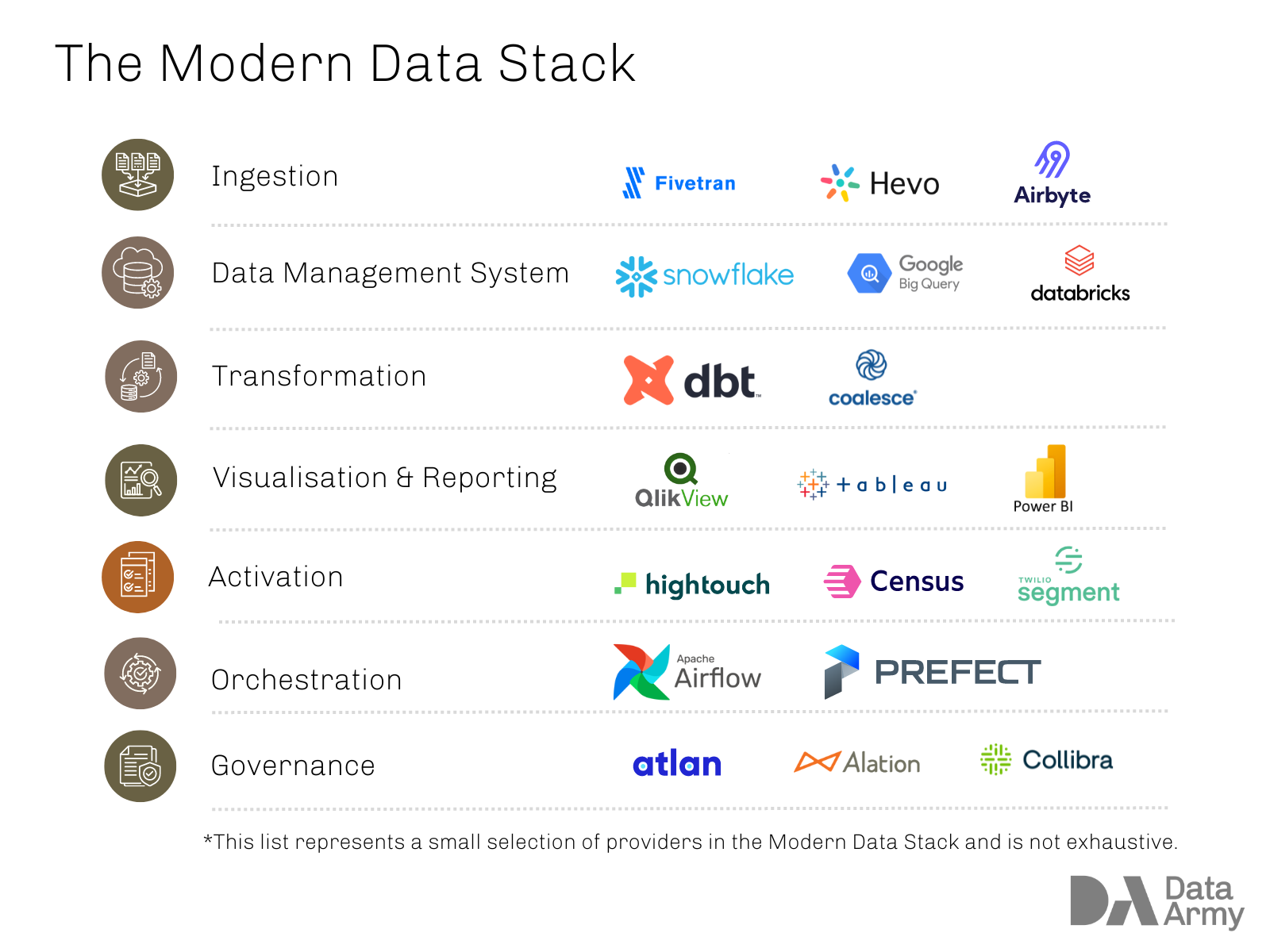

Here’s a breakdown of a typical data stack:

- Data Sources: The origins of the data, which can include databases, applications, APIs, sensors and other external data providers.

- Ingestion (Extract & Load): Tools and processes used to gather and import data from various sources into a central repository. Examples include extract & load tools, data pipelines and data integration platforms.

- Data Management System: Where the data is stored, such as data warehouses, data lakes or databases. These can be on-premise or cloud-based.

- Transformation (Data Processing): Tools and frameworks used to clean, transform and prepare data for analysis. This includes data wrangling, data cleaning, and batch or stream processing technologies.

- Visualisation & Reporting, including Data Analytics and Business Intelligence (BI): Tools used to analyse and visualise data to derive insights. This includes analytics platforms, BI tools and reporting software.

- Machine Learning (ML) and Artificial Intelligence (AI) Applications: Tools and platforms for building, training and deploying machine learning models and AI applications. This can include specific frameworks, libraries and cloud-based AI services.

- Orchestration: The coordination and automation of data workflows and processes. Tools for scheduling, monitoring, and managing data pipelines fall into this category.

- Governance and Security: Processes and tools to ensure data quality, integrity, privacy and compliance with regulations. This includes data cataloguing, metadata management and access control mechanisms.
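The layers listed above can be sketched end to end in a few lines of Python. This is a toy example. It assumes an in-memory SQLite database as a stand-in for a cloud warehouse and a hard-coded list (`RAW_ORDERS`, hypothetical) as the data source. The task names and the quality rule are illustrative and do not reflect any particular tool's API. The sketch follows the ELT pattern: raw data is loaded first and transformed inside the warehouse, while the standard library's `graphlib.TopologicalSorter` plays the role of a tiny orchestrator.

```python
import sqlite3
from graphlib import TopologicalSorter

# Data source (hypothetical): raw records as they might arrive from an API.
RAW_ORDERS = [
    {"order_id": 1, "customer": " Alice ", "amount": "120.50"},
    {"order_id": 2, "customer": "bob", "amount": "80"},
    {"order_id": 3, "customer": "Alice", "amount": None},  # incomplete record
]

# Storage: an in-memory SQLite database standing in for a data warehouse.
conn = sqlite3.connect(":memory:")


def ingest() -> None:
    """Extract & Load: land the raw records unchanged in a raw table."""
    conn.execute("CREATE TABLE raw_orders (order_id INTEGER, customer TEXT, amount TEXT)")
    conn.executemany(
        "INSERT INTO raw_orders VALUES (:order_id, :customer, :amount)", RAW_ORDERS
    )


def transform() -> None:
    """Transformation: clean and type the data inside the warehouse (ELT)."""
    conn.execute("""
        CREATE TABLE orders AS
        SELECT order_id,
               LOWER(TRIM(customer)) AS customer,
               CAST(amount AS REAL) AS amount
        FROM raw_orders
        WHERE amount IS NOT NULL
    """)


def check_quality() -> None:
    """Governance: a simple data-quality rule that fails loudly if violated."""
    bad = conn.execute(
        "SELECT COUNT(*) FROM orders WHERE amount < 0 OR customer = ''"
    ).fetchone()[0]
    if bad:
        raise ValueError(f"{bad} rows failed quality checks")


report_rows: list[tuple[str, float]] = []


def report() -> None:
    """Reporting: aggregate revenue per customer, as a BI tool might."""
    report_rows.extend(conn.execute(
        "SELECT customer, SUM(amount) FROM orders GROUP BY customer ORDER BY customer"
    ).fetchall())


# Orchestration: declare each task's upstream dependencies, then run in order.
TASKS = {"ingest": ingest, "transform": transform,
         "check_quality": check_quality, "report": report}
DEPENDENCIES = {"transform": {"ingest"},
                "check_quality": {"transform"},
                "report": {"check_quality"}}

for name in TopologicalSorter(DEPENDENCIES).static_order():
    TASKS[name]()

print(report_rows)
```

Running it prints `[('alice', 120.5), ('bob', 80.0)]`. The record with a missing amount is filtered out during transformation, and customer names are normalised before aggregation. In practice each function would be replaced by a dedicated tool, but the idea of declaring dependencies and running tasks in order carries over.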

A well-designed data stack allows organisations to efficiently manage their data lifecycle, from collection and storage to analysis and decision-making.

Most importantly, an effective data stack enables them to uncover deep insights in their data and fuel their AI and ML strategies.